"Even AI gotta practice clonin' Kendrick / The double entendre, the encore remnants." - Kendrick Lamar, America Has A Problem (Remix).

love all my supporters it’s time

It’s been just over two weeks since Playboi Carti dropped his latest album. Since his last, we’ve seen more than four years of pushed-back dates, scrapped rollouts, and straight-up lies. MUSIC is him thanking the world for the wait.

For those of you blissfully unaware of his existence, Playboi Carti is a rapper from Atlanta, a graduate of the SoundCloud rap scene, pioneer of the punk rap and rage sub-genres, and the definition of a dichotomous artist. Boundary-breaking or music-breaking? Depends on who you ask, but either way, it’s a bit strange for someone so known for his unparalleled creativity to be called out for using AI vocals. And, worse, this isn’t even the first time! But how did we get here?

D33pL34rning

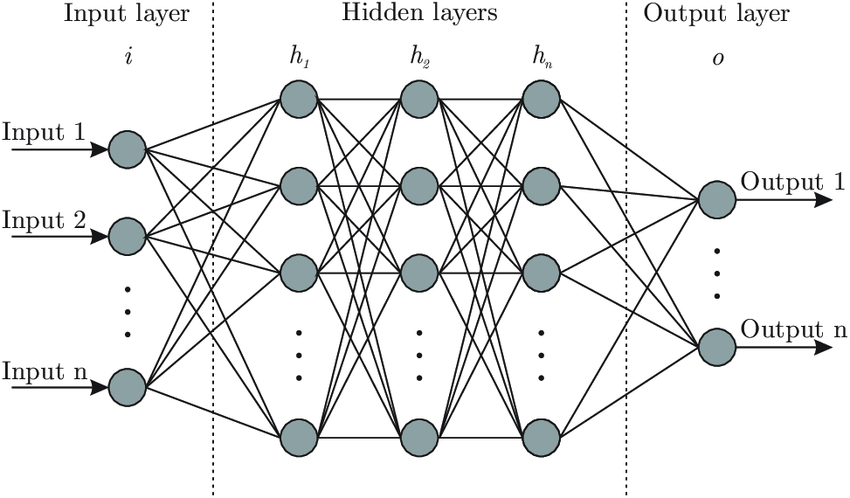

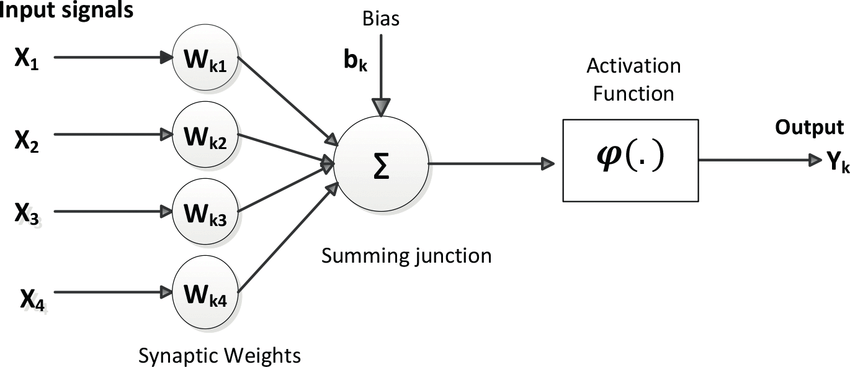

As with all AI worth worrying about, voice synthesis starts with a neural network. (And believe it or not, we have a brilliant article on neural networks here!) Most modern synthesisers are trained with deep learning on many hours of vocals, with the speech broken down into its core pronunciations so that the model can identify patterns and trends in how a person speaks. When the “voice” is output, it should replicate the intonations and flows of a human’s speech near-perfectly.

More advanced models, such as Meta’s Voicebox, use techniques like non-deterministic mapping, which essentially lets the model take in unlabelled variations as input and learn the implicit types and patterns in speech, instead of relying on rigid, homogeneous maps. Voicebox itself can mimic a human voice from just two seconds of audio.

It wasn’t always this way. Early voice synthesis, as heard in the first versions of Siri or an old sat-nav, relied on an algorithm arduously chopping up and splicing together thousands of lines of recorded human audio. Since then, it has improved dramatically. Today, a simple Google search is all it takes to get Winston Churchill to sing “Espresso”.
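For the curious, here is roughly what that old chop-and-splice approach looks like in miniature. This is only a toy sketch under loud assumptions: the `RECORDED_UNITS` table, its made-up sample values, and the `crossfade` and `synthesise` helpers are all hypothetical, and real systems worked with thousands of recorded snippets rather than three four-sample "words".

```python
# Toy stand-in for a library of recorded human audio.
# Each word maps to a few made-up audio samples (hypothetical values).
RECORDED_UNITS = {
    "turn": [0.0, 0.2, 0.4, 0.2],
    "left": [0.1, 0.3, 0.1, 0.0],
    "ahead": [0.0, 0.5, 0.5, 0.0],
}


def crossfade(a, b, overlap):
    """Join clips a and b, blending the last `overlap` samples of a
    with the first `overlap` samples of b to soften the seam."""
    if overlap == 0:
        return a + b
    head, tail = a[:-overlap], a[-overlap:]
    blended = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight shifts from clip a towards clip b
        blended.append((1 - w) * tail[i] + w * b[i])
    return head + blended + b[overlap:]


def synthesise(text, overlap=1):
    """Splice the stored clip for each word in `text` into one waveform."""
    words = text.lower().split()
    output = list(RECORDED_UNITS[words[0]])
    for word in words[1:]:
        output = crossfade(output, RECORDED_UNITS[word], overlap)
    return output


waveform = synthesise("turn left ahead")
print(len(waveform))
```

Note that nothing here is learnt: the "voice" can only ever say what was recorded in advance, which is exactly the limitation neural synthesis removed.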

No Auto?

The claims are that Carti used an AI vocal overlay to morph his vocals into the flow and cadence of another musician, Lawson, on two tracks on MUSIC (“Rather Lie” and “Fine Shit”) and his feature on the Weeknd’s recent single “Timeless”. A video I could only find on the platform formerly known as Twitter presents “indisputable proof” of this, by comparing vocals which seemingly did not change at all between the (leaked) demo and release tracks.

Now, does this actually indisputably prove anything? Probably not. Do I care if he used AI on his own album? Well, this article wasn’t written to point fingers. I only care about the question of whether artistic integrity can be maintained when using AI, here in the context of music.

As with every new technology, one could argue it gives musicians the opportunity to push creative boundaries further. In 1997, Autotune was pitched as the answer to off-key singing. Jump forward 10 years and it’s the norm to disparage it, along with the performances and artists using it. But jump ahead another 10 years, and Autotune’s critics are yelling extremely out of tune with current taste. Now, as in 2017, Autotune is everywhere. Modern pop would be unrecognisable without it. Plus, it spawned some brilliant new genres, such as hyperpop and melodic rap. Autotune is not a question of “yes” or “no” anymore, rather it’s “how much?”...

...though, this pivot could occur only after musical visionaries unlocked its potential for the world to appreciate. Think Daft Punk, T-Pain, Future, Charli XCX. Could this happen with AI? DJ David Guetta thinks so, and he describes it as just another “modern instrument” artists can now use to express a vision or feeling.

He is all but vindicated by the countless AI-generated tracks of artists performing lyrics they didn’t write or feel.

TUNDRA

Another may argue that generative AI, as it remains, is ontologically destructive to creativity. While this view may seem alarmist, in the mainstream music sphere at least, AI vocals have only ever been used to imitate existing artists or older sounds, and not to cultivate a brand-new sound like with Autotune, or the synths, or drum machines, or samplers, before it. This is exactly why Carti’s new tracks sit in a grey area. It may be him, yet is it actually him if he’s using not just another rapper’s flow, but their voice too? Could it be a post-modern execution of songwriters using vocalists to express their vision?

Legally, the future looks challenging, if not bleak and barren. Musicians are staging a muted protest. New amendments to UK government copyright laws would allow AI models to scrape internet content (including music) for training by default, unless the creator opts out. In response, over 1,000 musicians collaborated to release an album with no vocals or instruments just this February. Instead, you only hear the sounds and noises of everyday life.

Meanwhile, their record labels have moved fast, firing off lawsuits and lobbying consistently for stricter artistic protection laws, but I do believe this speed is motivated by economics and not ethics. Find a method of using AI in music that is profitable for them and rest assured they will be the first to change their proverbial tune.

Further reading / references

A. Downie and M. Hayes, “AI voice,” IBM, Jan. 23, 2025. https://www.ibm.com/think/topics/ai-voice

I. Parkel, “AI-generated song mimicking Drake and The Weeknd submitted for Grammy consideration,” The Independent, Sep. 07, 2023. https://www.independent.co.uk/arts-entertainment/music/news/drake-and-weeknd-ai-song-heart-on-my-sleeve-b2406902.html

L. Kuenssberg, “Sir Paul McCartney: Don’t let AI rip off musicians,” BBC News, Jan. 25, 2025. https://www.bbc.co.uk/news/articles/c8xqv9g8442o

A. Owen, “Now and Then: The Use of AI on the ‘Final’ Beatles’ Song,” The Saint, Nov. 09, 2023. https://www.thesaint.scot/post/now-and-then-the-use-of-ai-on-the-final-beatles-song